Data Engineering with Apache Spark

Build scalable data processing pipelines using Apache Spark for big data analytics and real-time stream processing

Course Overview

Expected Outcomes

Pipeline Development

Performance Optimization

Stream Processing

Data Quality

Technical Stack

Core Framework

Data Processing

Infrastructure

Development Environment

Students work in industry-standard development environments, including Databricks Community Edition, local Spark clusters, and cloud-based environments. The curriculum emphasizes version control with Git, code review practices, and the collaborative workflows used by engineering teams.

Projects incorporate CI/CD pipelines for automated testing and deployment of Spark applications. Participants gain experience with Jupyter notebooks for exploratory analysis, unit testing frameworks for data transformations, and logging patterns for production debugging.
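As an illustration of unit testing a data transformation with logging, here is a minimal sketch. It is not taken from the course materials. The function `normalize_record` and the logger name `pipeline.transform` are hypothetical. It assumes the transformation logic is kept in a plain Python function, so it can be tested with the standard `unittest` module without starting a Spark cluster.

```python
import logging
import unittest
from typing import Optional

# Hypothetical logger name; a real pipeline would use its own naming scheme.
logger = logging.getLogger("pipeline.transform")


def normalize_record(record: dict) -> Optional[dict]:
    """Hypothetical transformation: drop records without an id and
    normalize the email field (trimmed, lowercased, empty if missing)."""
    if record.get("id") is None:
        # Log dropped records so they can be traced when debugging in production.
        logger.warning("dropping record without id: %r", record)
        return None
    email = (record.get("email") or "").strip().lower()
    return {"id": record["id"], "email": email}


class NormalizeRecordTest(unittest.TestCase):
    def test_normalizes_email(self):
        result = normalize_record({"id": 1, "email": "  A@Example.COM "})
        self.assertEqual(result, {"id": 1, "email": "a@example.com"})

    def test_missing_email_becomes_empty_string(self):
        self.assertEqual(normalize_record({"id": 2}), {"id": 2, "email": ""})

    def test_drops_and_logs_record_without_id(self):
        # assertLogs fails the test if no WARNING is emitted on this logger.
        with self.assertLogs("pipeline.transform", level="WARNING"):
            self.assertIsNone(normalize_record({"email": "x@example.com"}))


if __name__ == "__main__":
    unittest.main()
```

Keeping row-level logic in a plain function like this is one way to make it testable in a CI pipeline. Inside a Spark job, the same function could then be applied to each row, for instance through `RDD.map`, with the `None` results filtered out afterwards.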

Who Should Attend

Ideal For

Prerequisites

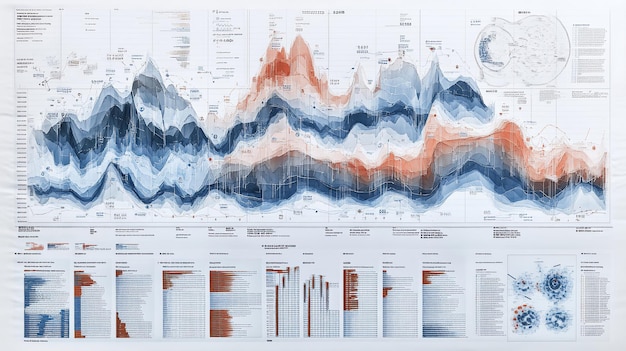

Skill Development Tracking

Technical Assessments

Performance Metrics

Start Your Spark Journey

Join data engineers building production-grade processing pipelines for enterprise analytics systems in Tokyo